0.1.1. Installing VMware

https://www.vmware.com/products/workstation-pro/workstation-pro-evaluation.html


0.1.2. Running the virtual machines

Repeat the same steps for the master and worker VMs.

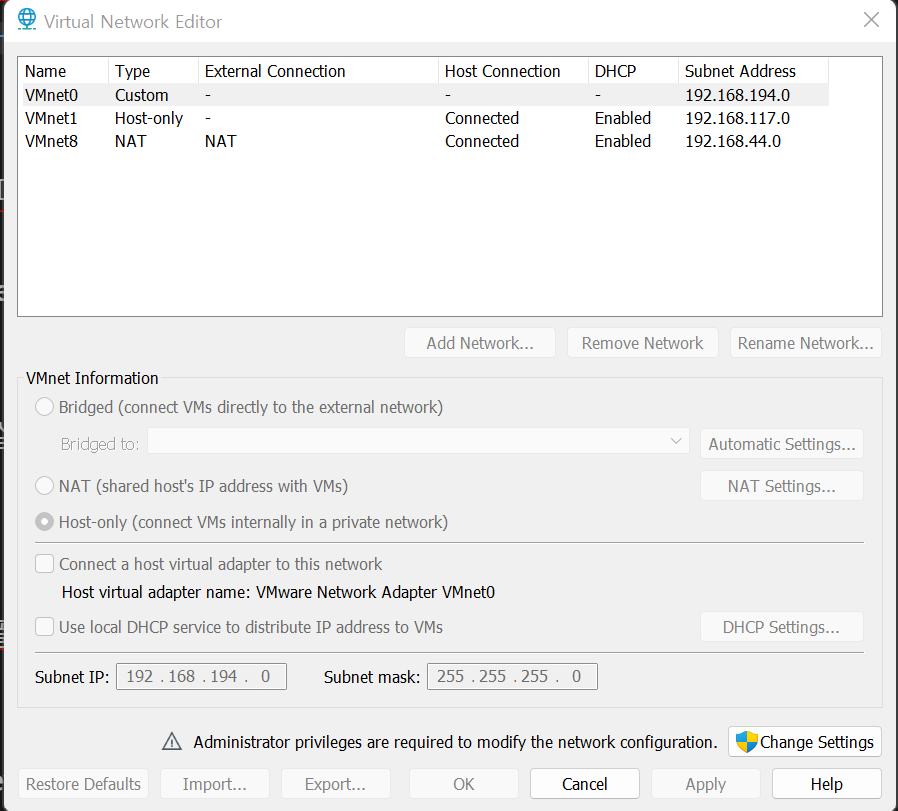

0.1.2.1. bridge network

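0.1.2.2. Creating the machines

Download the Ubuntu 22.04 LTS image.

When creating the VM, choose to store the virtual disk as a single file.

Set the network adapter to Bridged.

Remove any I/O devices you do not need.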

| VM | vCPUs | Memory (GB) |
|---|---|---|
| master1 | 4 | 8 |
| master2 | 2 | 4 |
| master3 | 2 | 4 |
| worker | 2 | 4 |

Then install the OS and reboot.

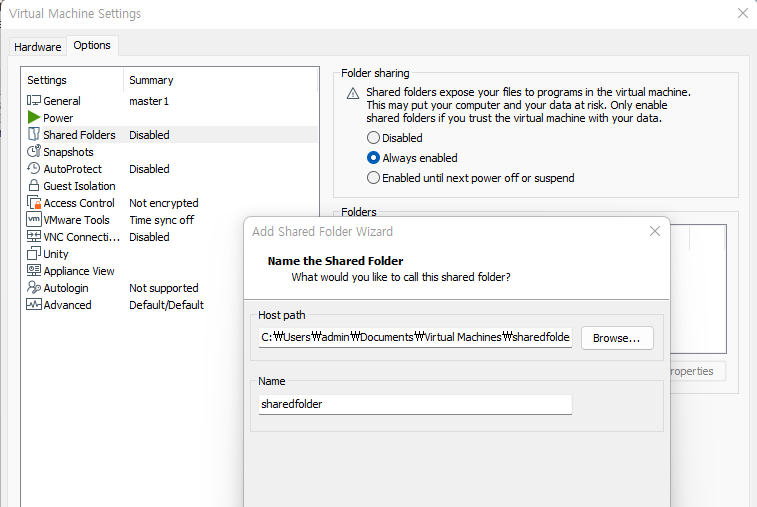

0.1.2.3. Shared folder

Create a directory on the host to share.

Once the reboot finishes, enable Shared Folders in the VM settings.

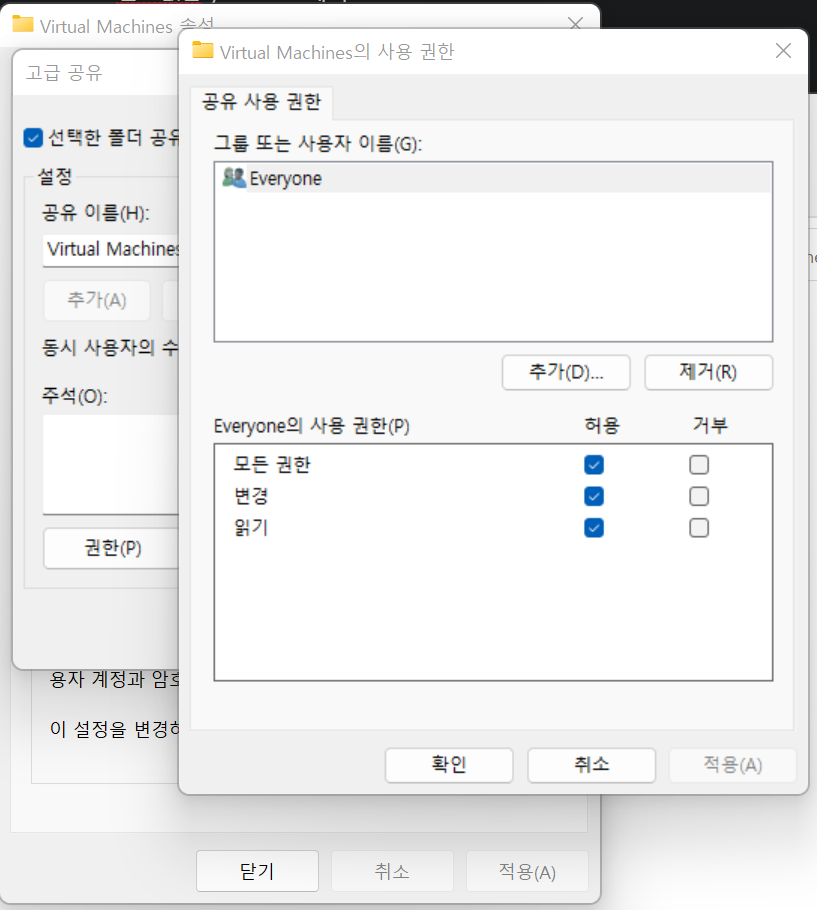

Set the sharing permissions for the shared folder.

Next, VMware Tools should be installed from the VM console, but that menu item was disabled for me, so I installed it with apt instead:

```bash
$ sudo apt update
$ sudo apt install open-vm-tools
$ sudo apt install open-vm-tools-desktop
```

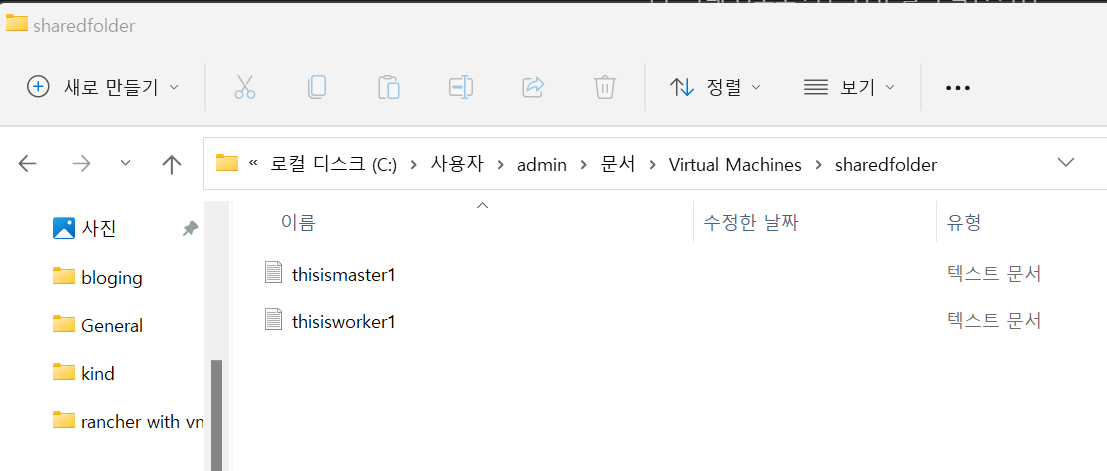

After that, the shared folder is available under:

```bash
/mnt/hgfs/
```

Test: create a file in the shared folder from each VM.

```bash
master1@master1-virtual-machine:/mnt/hgfs/sharedfolder$ touch thisismaster1.txt
```

```bash
worker1@worker1-virtual-machine:/mnt/hgfs/sharedfolder$ touch thisisworker1.txt
```
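If /mnt/hgfs comes up empty (for example after a reboot), the share can be mounted with `vmhgfs-fuse`, which ships with open-vm-tools. The sketch below is a minimal example under assumptions: `append_once` and `HGFS_FSTAB_LINE` are hypothetical names, and the fstab options are a common choice that you may need to adjust for your VMware version.

```shell
# Hypothetical helper: append a line to a file only if that exact line is not already there,
# so re-running the setup does not create duplicate entries.
append_once() {
  local line="$1" file="$2"
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Assumed fstab entry that mounts all VMware shared folders under /mnt/hgfs at boot.
HGFS_FSTAB_LINE='.host:/ /mnt/hgfs fuse.vmhgfs-fuse defaults,allow_other 0 0'

# usage (as root):
#   mkdir -p /mnt/hgfs
#   append_once "$HGFS_FSTAB_LINE" /etc/fstab
#   mount -a
# or mount once by hand without touching fstab:
#   vmhgfs-fuse .host:/ /mnt/hgfs -o allow_other
```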

0.1.2.4. Installing the SSH server

```bash
sudo apt install openssh-server
ip addr    # note the VM's IP address
```

0.1.2.5. SSH from the host into the VM

```bash
ssh [vm-username]@[vm-ip]
```
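To get the address for `ssh` without reading through the full `ip addr` dump, a small filter over the one-line (`-o`) output of iproute2 is enough. This is a hypothetical helper (`ipv4_addrs`), assuming the usual `ip -4 -o addr show` layout where the fourth field is `address/prefix`.

```shell
# Hypothetical helper: read `ip -4 -o addr show` output on stdin and print each IPv4 address
# without its /prefix, so it can be passed straight to ssh.
ipv4_addrs() {
  awk '$3 == "inet" { split($4, a, "/"); print a[1] }'
}

# usage, on the VM ("scope global" skips 127.0.0.1):
#   ip -4 -o addr show scope global | ipv4_addrs
# then, from the host:
#   ssh [vm-username]@<printed address>
```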

0.1.3. RKE2: building the k8s cluster

All machines have already run `apt update` and `apt upgrade`.

0.1.3.1. server node (master-1)

Disable swap and the firewall:

```bash
server@master-1:~$ sudo su -
[sudo] password for server:
root@master-1:~# systemctl stop ufw && ufw disable && iptables -F
Firewall stopped and disabled on system startup
root@master-1:~# swapoff -a
root@master-1:~#
```
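`swapoff -a` only lasts until the next reboot, while `ufw disable` already persists. A minimal sketch for keeping swap off permanently, assuming a standard Ubuntu fstab where the third field is the filesystem type; `comment_out_swap` is a hypothetical name.

```shell
# Hypothetical helper: comment out every active swap entry in an fstab-style file
# so swap stays off after reboot. A .bak copy of the original is kept next to it.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  cp "$fstab" "$fstab.bak"
  # Non-comment lines whose 3rd field (fs type) is "swap" get a leading '#'
  awk '!/^[ \t]*#/ && $3 == "swap" { print "#" $0; next } { print }' "$fstab.bak" > "$fstab"
}

# usage (as root): swapoff -a && comment_out_swap
```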

0.1.3.2. Downloading and enabling RKE2

```bash
root@master-1:~# apt install curl
root@master-1:~# curl -sfL https://get.rke2.io | INSTALL_RKE2_TYPE="server" sh -
[INFO] finding release for channel stable
[INFO] using v1.24.12+rke2r1 as release
[INFO] downloading checksums at https://github.com/rancher/rke2/releases/download/v1.24.12+rke2r1/sha256sum-amd64.txt
[INFO] downloading tarball at https://github.com/rancher/rke2/releases/download/v1.24.12+rke2r1/rke2.linux-amd64.tar.gz
[INFO] verifying tarball
[INFO] unpacking tarball file to /usr/local
root@master-1:~# systemctl enable rke2-server.service
Created symlink /etc/systemd/system/multi-user.target.wants/rke2-server.service → /usr/local/lib/systemd/system/rke2-server.service.
```

CNI: select Calico in /etc/rancher/rke2/config.yaml.

```bash
root@master-1:~# apt install vim -y
root@master-1:~# mkdir -p /etc/rancher/rke2
root@master-1:~# vim /etc/rancher/rke2/config.yaml
```

```yaml
cni: "calico"
```

SAN: add extra hostnames to the API server certificate with `tls-san` in the same config.yaml. I used arbitrary values:

```yaml
cni: "calico"
tls-san:
  - my-kubernetes-domain.com
  - another-kubernetes-domain.com
```
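The CNI and tls-san settings can also be written in one step instead of editing with vim. A sketch under assumptions: `write_master1_config` and the `RKE2_CONFIG_DIR` override are hypothetical and exist only so the helper can be tried outside /etc; RKE2 itself reads /etc/rancher/rke2/config.yaml.

```shell
# Hypothetical helper: write master-1's whole config.yaml (CNI + SAN) in one go.
# RKE2_CONFIG_DIR is an assumed override for testing; the default is the path RKE2 reads.
write_master1_config() {
  local dir="${RKE2_CONFIG_DIR:-/etc/rancher/rke2}"
  mkdir -p "$dir"
  cat > "$dir/config.yaml" <<'EOF'
cni: "calico"
tls-san:
  - my-kubernetes-domain.com
  - another-kubernetes-domain.com
EOF
}

# usage (as root, before `systemctl start rke2-server.service`): write_master1_config
```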

Start the rke2-server service and check the SAN values:

```bash
root@master-1:~# systemctl start rke2-server.service
root@master-1:~# openssl x509 -in /var/lib/rancher/rke2/server/tls/serving-kube-apiserver.crt -text
Certificate:
    Data:
        Version: 3 (0x2)
        Serial Number: 3009893080140855171 (0x29c549cf2db3b783)
        Signature Algorithm: ecdsa-with-SHA256
        Issuer: CN = rke2-server-ca@1682035894
        Validity
            Not Before: Apr 21 00:11:34 2023 GMT
            Not After : Apr 20 00:11:34 2024 GMT
        Subject: CN = kube-apiserver
        Subject Public Key Info:
            Public Key Algorithm: id-ecPublicKey
                Public-Key: (256 bit)
                pub:
                    04:72:a8:bf:6f:5b:d6:e8:84:87:6a:7d:d1:da:3e:
                    0a:21:dc:a7:25:fd:be:95:05:a6:2b:f8:06:85:23:
                    09:87:87:3d:d7:f6:e7:02:33:00:17:d7:6e:2c:80:
                    13:b3:7a:5f:a8:cc:3b:65:9b:65:2e:d0:52:b3:5f:
                    c5:83:71:85:d1
                ASN1 OID: prime256v1
                NIST CURVE: P-256
        X509v3 extensions:
            X509v3 Key Usage: critical
                Digital Signature, Key Encipherment
            X509v3 Extended Key Usage:
                TLS Web Server Authentication
            X509v3 Authority Key Identifier:
                FF:A9:4A:96:C9:05:B9:D8:32:A4:01:A4:E1:7A:4D:95:DD:53:1F:B0
            X509v3 Subject Alternative Name:
                DNS:kubernetes, DNS:kubernetes.default, DNS:kubernetes.default.svc, DNS:kubernetes.default.svc.cluster.local, DNS:my-kubernetes-domain.com, DNS:another-kubernetes-domain.com, DNS:localhost, DNS:master-1, IP Address:127.0.0.1, IP Address:0:0:0:0:0:0:0:1, IP Address:192.168.31.175, IP Address:10.43.0.1
```

Both custom domains from `tls-san` and the node IP 192.168.31.175 appear under X509v3 Subject Alternative Name.
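Scanning the SAN line by eye is error-prone, so a small filter can split it into one entry per line and let `grep -x` confirm a specific name. A sketch assuming openssl's usual `-text` layout, where the SAN list sits on the line right after the `X509v3 Subject Alternative Name:` header; `list_sans` is a hypothetical name.

```shell
# Hypothetical helper: read `openssl x509 -text` output on stdin and print one SAN entry per line.
list_sans() {
  awk '/X509v3 Subject Alternative Name/ {
         getline                     # the SAN list is on the line after the header
         gsub(/^[ \t]+/, "")         # drop openssl indentation
         n = split($0, sans, ", ")
         for (i = 1; i <= n; i++) print sans[i]
       }'
}

# usage: confirm a custom SAN made it into the API server certificate
#   openssl x509 -in /var/lib/rancher/rke2/server/tls/serving-kube-apiserver.crt -noout -text \
#     | list_sans | grep -x 'DNS:my-kubernetes-domain.com'
```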

Verifying the installation:

```bash
# systemctl status rke2-server.service
# journalctl -u rke2-server -f
Jul 07 08:39:16 rke2-1 rke2[4327]: I0707 08:39:16.178631 4327 event.go:291] "Event occurred" object="rke2-1" kind="Node" apiVersion="v1" type="Normal" reason="Synced" message="Node synced successfully"
```

The installation is reportedly successful once "Node synced successfully" appears, but during my own install I could not find a kind="Node" entry in the log.

Configuring kubeconfig:

```bash
root@master-1:~# mkdir ~/.kube/
root@master-1:~# cp /etc/rancher/rke2/rke2.yaml ~/.kube/config
root@master-1:~# export PATH=$PATH:/var/lib/rancher/rke2/bin/
root@master-1:~# echo 'export PATH=/usr/local/bin:/var/lib/rancher/rke2/bin:$PATH' >> ~/.bashrc
root@master-1:~# echo 'source <(kubectl completion bash)' >>~/.bashrc
root@master-1:~# echo 'alias k=kubectl' >>~/.bashrc
root@master-1:~# echo 'complete -F __start_kubectl k' >>~/.bashrc
root@master-1:~#
```
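The copied kubeconfig holds cluster-admin credentials, and plain `mkdir ~/.kube/` fails if the directory already exists. A sketch that handles both, assuming GNU coreutils `install`; `setup_kubeconfig` is a hypothetical name and the 600 mode is a suggested choice, not an RKE2 requirement.

```shell
# Hypothetical helper: copy the RKE2 admin kubeconfig to ~/.kube/config, readable by the owner only.
# install -D creates ~/.kube when missing and does not fail when it already exists.
setup_kubeconfig() {
  local src="${1:-/etc/rancher/rke2/rke2.yaml}" dest="${2:-$HOME/.kube/config}"
  install -D -m 600 "$src" "$dest"
}

# usage (as root on master-1): setup_kubeconfig
```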

Check the nodes and the Calico resources, then print the server token that the other nodes use to join:

```bash
root@master-1:~# kubectl get node
NAME       STATUS   ROLES                       AGE   VERSION
master-1   Ready    control-plane,etcd,master   11m   v1.24.12+rke2r1
root@master-1:~#
root@master-1:~# kubectl get ns calico-system
NAME            STATUS   AGE
calico-system   Active   10m
root@master-1:~#
root@master-1:~# kubectl get deploy -n calico-system
NAME                      READY   UP-TO-DATE   AVAILABLE   AGE
calico-kube-controllers   1/1     1            1           10m
calico-typha              1/1     1            1           10m
root@master-1:~# kubectl get ds -n calico-system
NAME          DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR            AGE
calico-node   1         1         1       1            1           kubernetes.io/os=linux   10m
root@master-1:~#
root@master-1:~# cat /var/lib/rancher/rke2/server/node-token
```

0.1.3.3. server node (master-2,3)

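Add these two in a similar way.

I had previously built clusters only in cloud environments. With VMware on a PC without much headroom, starting the rke2-server service on master-2 and master-3 at the same time caused conflicts.

So I waited until master-2 was fully Ready before joining master-3.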
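Waiting for master-2 can be scripted with a retry loop. `kubectl wait` on its own exits immediately with an error while the node has not registered yet, so the sketch below retries it. `wait_for` is a hypothetical helper, and the node name `master-2` is an assumption based on the hostnames used in this post.

```shell
# Hypothetical helper: retry a command every 5 seconds until it succeeds or TIMEOUT seconds pass.
wait_for() {
  local timeout="$1" waited=0
  shift
  until "$@"; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out after ${timeout}s: $*" >&2
      return 1
    fi
    sleep 5
    waited=$((waited + 5))
  done
}

# usage on master-1, after starting rke2-server on master-2 and before touching master-3:
#   wait_for 600 kubectl wait --for=condition=Ready node/master-2 --timeout=30s
```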

```bash
swapoff -a
systemctl stop ufw && ufw disable && iptables -F
apt install curl -y
curl -sfL https://get.rke2.io | INSTALL_RKE2_TYPE="server" sh -
systemctl enable rke2-server.service
mkdir -p /etc/rancher/rke2/
apt install vim -y
vim /etc/rancher/rke2/config.yaml
```

```yaml
server: https://192.168.31.175:9345
token: K109c6c7bf5d0cddb69d73c84449e06bab1691392e315b7e29a043076369e31123c::server:0c1393818e37f802cb5daac78d68e517
tls-san:
  - my-kubernetes-domain.com
  - another-kubernetes-domain.com
```

```bash
systemctl start rke2-server.service
```
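The join config for master-2/3 (and, without the SAN list, for the worker) follows a fixed pattern, so it can be generated instead of typed. A sketch under assumptions: `write_join_config` and the `RKE2_CONFIG_DIR` override are hypothetical, and `$TOKEN` stands for the value printed by `cat /var/lib/rancher/rke2/server/node-token` on master-1.

```shell
# Hypothetical helper: write config.yaml for a node joining the existing cluster.
# Args: master-1 IP, join token, then optional tls-san names.
# RKE2_CONFIG_DIR is an assumed override for testing; RKE2 reads /etc/rancher/rke2/config.yaml.
write_join_config() {
  local server_ip="$1" token="$2"
  shift 2
  local dir="${RKE2_CONFIG_DIR:-/etc/rancher/rke2}"
  mkdir -p "$dir"
  {
    printf 'server: https://%s:9345\n' "$server_ip"
    printf 'token: %s\n' "$token"
    if [ "$#" -gt 0 ]; then
      printf 'tls-san:\n'
      printf '  - %s\n' "$@"
    fi
  } > "$dir/config.yaml"
  chmod 600 "$dir/config.yaml"   # the file contains the cluster join token
}

# usage (as root):
#   master-2/3: write_join_config 192.168.31.175 "$TOKEN" my-kubernetes-domain.com another-kubernetes-domain.com
#   worker:     write_join_config 192.168.31.175 "$TOKEN"
```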

0.1.3.4. worker node

```bash
root@worker:~# swapoff -a
root@worker:~# systemctl stop ufw && ufw disable && iptables -F
Firewall stopped and disabled on system startup
root@worker:~#
root@worker:~# apt install curl
root@worker:~# curl -sfL https://get.rke2.io | INSTALL_RKE2_TYPE="agent" sh -
root@worker:~# mkdir -p /etc/rancher/rke2/
root@worker:~# vim /etc/rancher/rke2/config.yaml
```

```yaml
server: https://192.168.31.175:9345
token: K109c6c7bf5d0cddb69d73c84449e06bab1691...
```

```bash
root@worker:~# systemctl enable rke2-agent.service
Created symlink /etc/systemd/system/multi-user.target.wants/rke2-agent.service → /usr/local/lib/systemd/system/rke2-agent.service.
root@worker:~# systemctl start rke2-agent.service
root@worker:~# export PATH=$PATH:/var/lib/rancher/rke2/bin/
root@worker:~# mkdir ~/.kube
# cp, not mv: the running kubelet still uses this file
root@worker:~# cp /var/lib/rancher/rke2/agent/kubelet.kubeconfig ~/.kube/config
```

The worker node setup is simpler.
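The kubelet kubeconfig only carries node-level credentials. To run admin kubectl commands from another machine, a common alternative is to copy master-1's /etc/rancher/rke2/rke2.yaml, which points at `https://127.0.0.1:6443`, and rewrite that address to master-1's IP (192.168.31.175 is in the certificate's SAN list shown earlier). A sketch; `point_kubeconfig_at` is a hypothetical name, and copying through the shared folder is an assumption.

```shell
# Hypothetical helper: point a copied rke2.yaml at master-1 instead of 127.0.0.1.
point_kubeconfig_at() {
  local kubeconfig="$1" server_ip="$2"
  sed -i "s#https://127\.0\.0\.1:6443#https://${server_ip}:6443#" "$kubeconfig"
}

# usage on the worker, assuming master-1 copied rke2.yaml into the shared folder:
#   install -D -m 600 /mnt/hgfs/sharedfolder/rke2.yaml ~/.kube/config
#   point_kubeconfig_at ~/.kube/config 192.168.31.175
# then delete the copy from the shared folder, since it holds admin credentials.
```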

0.1.3.5. kube-bench

Scanning the cluster:

```bash
root@master-1:~# vim kubebench.yaml
root@master-1:~#
root@master-1:~# kubectl apply -f kubebench.yaml
job.batch/kube-bench created
root@master-1:~# kubectl get pods
NAME               READY   STATUS    RESTARTS   AGE
kube-bench-j6tpt   1/1     Running   0          9s
root@master-1:~# kubectl get pods
NAME               READY   STATUS      RESTARTS   AGE
kube-bench-j6tpt   0/1     Completed   0          27s
```

kubebench.yaml

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: kube-bench
spec:
  template:
    metadata:
      labels:
        app: kube-bench
    spec:
      hostPID: true
      containers:
        - name: kube-bench
          image: aquasec/kube-bench:v0.6.8
          command: ["kube-bench"]
          volumeMounts:
            - name: var-lib-etcd
              mountPath: /var/lib/etcd
              readOnly: true
            - name: var-lib-kubelet
              mountPath: /var/lib/kubelet
              readOnly: true
            - name: var-lib-kube-scheduler
              mountPath: /var/lib/kube-scheduler
              readOnly: true
            - name: var-lib-kube-controller-manager
              mountPath: /var/lib/kube-controller-manager
              readOnly: true
            - name: etc-systemd
              mountPath: /etc/systemd
              readOnly: true
            - name: lib-systemd
              mountPath: /lib/systemd/
              readOnly: true
            - name: srv-kubernetes
              mountPath: /srv/kubernetes/
              readOnly: true
            - name: etc-kubernetes
              mountPath: /etc/kubernetes
              readOnly: true
            # /usr/local/mount-from-host/bin is mounted to access kubectl / kubelet, for auto-detecting the Kubernetes version.
            # You can omit this mount if you specify --version as part of the command.
            - name: usr-bin
              mountPath: /usr/local/mount-from-host/bin
              readOnly: true
            - name: etc-cni-netd
              mountPath: /etc/cni/net.d/
              readOnly: true
            - name: opt-cni-bin
              mountPath: /opt/cni/bin/
              readOnly: true
      restartPolicy: Never
      volumes:
        - name: var-lib-etcd
          hostPath:
            path: "/var/lib/etcd"
        - name: var-lib-kubelet
          hostPath:
            path: "/var/lib/kubelet"
        - name: var-lib-kube-scheduler
          hostPath:
            path: "/var/lib/kube-scheduler"
        - name: var-lib-kube-controller-manager
          hostPath:
            path: "/var/lib/kube-controller-manager"
        - name: etc-systemd
          hostPath:
            path: "/etc/systemd"
        - name: lib-systemd
          hostPath:
            path: "/lib/systemd"
        - name: srv-kubernetes
          hostPath:
            path: "/srv/kubernetes"
        - name: etc-kubernetes
          hostPath:
            path: "/etc/kubernetes"
        - name: usr-bin
          hostPath:
            path: "/usr/bin"
        - name: etc-cni-netd
          hostPath:
            path: "/etc/cni/net.d/"
        - name: opt-cni-bin
          hostPath:
            path: "/opt/cni/bin/"
```

Output: kubebench-output.log

The scan reported FAIL and WARN results in the worker node security configuration and the cluster policy sections.
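To save the scan and get a quick tally, the Job's log can be written to a file and counted by result tag. A sketch assuming kube-bench's plain-text output, where each result line starts with `[PASS]`, `[FAIL]`, `[WARN]` or `[INFO]` (section headers are also tagged `[INFO]`); `bench_counts` is a hypothetical name.

```shell
# Hypothetical helper: count kube-bench result lines per tag in a saved log.
bench_counts() {
  grep -oE '^\[(PASS|FAIL|WARN|INFO)\]' "${1:-kubebench-output.log}" | sort | uniq -c
}

# usage on master-1, once the kube-bench pod is Completed:
#   kubectl logs job/kube-bench > kubebench-output.log
#   bench_counts kubebench-output.log
#   grep '^\[FAIL\]' kubebench-output.log    # list the failing checks
```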

0.1.4. Installing cert-manager, Helm, and Rancher

cert-manager: a controller that manages certificates

```bash
kubectl apply -f https://github.com/jetstack/cert-manager/releases/download/v1.5.4/cert-manager.yaml
kubectl -n cert-manager rollout status deploy/cert-manager
kubectl -n cert-manager rollout status deploy/cert-manager-webhook
kubectl get pods --namespace cert-manager
```

Installing Helm

```bash
curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/master/scripts/get-helm-3
chmod 700 get_helm.sh
./get_helm.sh
```

Deploying the Rancher UI

```bash
kubectl create namespace cattle-system
helm repo add rancher-stable https://releases.rancher.com/server-charts/stable
helm repo update
helm search repo rancher-stable
helm install rancher rancher-stable/rancher --namespace cattle-system --set hostname=192.168.31.175.nip.io --set replicas=1
```

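At this point the three deployments should become Ready and the Rancher UI should be reachable at the configured hostname.

For me, however, at most two ever became Ready, and pending pods went into ContainerStatusUnknown, so new pods kept being created.

Other times the cert-manager and cattle-system resources were Evicted and ended in Error, and I reinstalled repeatedly.

It turned out the problem was apparently that the VMware VMs did not have enough resources.

I plan to repeat the exercise on a t3a.medium-class instance.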
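Since the failures came down to VM resources, a quick preflight check before `helm install` can confirm a VM really has the CPUs and memory you intended. A sketch with assumptions: `check_min_resources` is a hypothetical name, and its defaults (2 CPUs, 4 GB) simply mirror the master2/master3/worker rows of the VM spec table, not official Rancher sizing guidance.

```shell
# Hypothetical preflight check: fail if this machine has fewer CPUs or less RAM than requested.
check_min_resources() {
  local min_cpus="${1:-2}" min_mem_gb="${2:-4}"
  local cpus mem_kb
  cpus=$(nproc)
  mem_kb=$(awk '/^MemTotal:/ { print $2 }' /proc/meminfo)
  # MemTotal is a bit below the configured RAM, so allow ~10% slack
  if [ "$cpus" -lt "$min_cpus" ] || [ "$mem_kb" -lt $((min_mem_gb * 1024 * 1024 * 9 / 10)) ]; then
    echo "insufficient: ${cpus} CPUs, $((mem_kb / 1024)) MiB RAM (want ${min_cpus} CPUs, ${min_mem_gb} GB)" >&2
    return 1
  fi
  echo "ok: ${cpus} CPUs, $((mem_kb / 1024)) MiB RAM"
}

# usage: check_min_resources 4 8   # master1's row in the table
# To see how much of a node is already requested, check the "Allocated resources"
# section of: kubectl describe node master-1
```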

1. References

https://mingyum119.tistory.com/101

https://osc-korea.atlassian.net/wiki/spaces/OP/pages/618070035/RKE2#2.2-CNI-(Calico)

https://docs.rke2.io/install/quickstart#server-node-installation

https://m.blog.naver.com/onlywin7788/221845944242

https://docs.rke2.io/install/ha#5-optional-join-agent-nodes

https://fabxoe.tistory.com/130

https://tommypagy.tistory.com/440
